Nintendo Tetris AI Revisited

[ About | Videos | Download | Run | Details | References ]

Previously, I programmed a plugin that procedurally propels the perpetual procession of plummeting pieces presented to the player in the puzzle pastime Tetris. But, my efforts had some shortcomings:

This article describes my upgraded bot that plays Nintendo Tetris without disabling gravity. It assesses and manages risk while aggressively aiming for max outs under high drop speed.

Watch the bot max out Nintendo Tetris starting from level 19 in all the videos below.

The .zip contains:

Note, the bot is designed for level 19 and above only. It is not able to control the pieces in the lower levels.

To watch it play faster, select Machine | Speed | Max.

Below level 10, the drop speed of each level is slightly faster than the one before it. But, for 10 and above, there are several plateaus where the speed remains constant across multiple levels. This is a consequence of how the drop mechanism works. The speed is represented as frames per drop, which is an integer value. That left few options for the higher levels: 10–12, 13–15, 16–18, 19–28 and 29+ are 5, 4, 3, 2, and 1 frames/drop, respectively.

The bot was designed to tackle the 19–28 plateau only. On even frames, it asserts either Left, Right, A, B or nothing on the gamepad. And, on odd frames, it let’s the automatic drop happen without asserting any of the buttons. The game does not seem to accept horizontal movement that coincides with rotation; so, each button is asserted independently on an even frame.

Unlike the masters playing on the higher levels, the bot does not take advantage of the Delayed Auto Shift (DAS), a.k.a. autorepeat, and the associated techniques to keep it charged. It’s more like Thor Aackerlund’s vibrating thumb technique. But, it pushes the vibration to the theoretical maximum that the game can handle.

Players are rewarded with 40, 100, 300 and 1200 points for Single, Double, Triple and Tetris clears, respectively. And, the point values are multiplied by the level number plus 1. In other words, to achieve a high score, the player should strive for the maximum number of Tetrises while playing at the highest levels for as long as possible.

19 happens to be the highest starting level, enabling the bot to jump right into the 19–28 plateau. But, due to a bug in the level counting mechanism, the game will advance to level 20 on 140 line clears, instead of the expected 200. From there, it will advance one level every 10 lines. And, when it reaches 230, the bot will graduate from the plateau and quickly succumb. In summary, it needs to achieve as many Tetrises as possible before clearing 230 lines.

Soft drops can also potentially increase the score. To gain points, the piece needs to be soft dropped all the way down until it locks into the playfield. Any brief soft drop that might occur along the way in positioning the piece will not contribute to the score. If successful, the player will gain 1 point for every row crossed during the soft drop. And, the resultant value is not multiplied by the level number even if the soft drop clears lines.

Soft dropping only has a marginal effect on the overall score. Nonetheless, whenever possible, the bot will complete a placement by asserting Down on the gamepad to gain those few extra points. In rare cases, it might mean the difference between a very high score and a max out.

When a piece spawns, the bot examines every possible placement of the current piece and the next piece. A valid placement is a position in which a piece is supported either by solid cells or by the playfield floor. It can be reached from the spawn location through a sequence of horizontal movements, rotations and drops. The valid placements and their pathway sequences are found using breadth-first search (see Searcher).

Placing a piece into the playfield has consequences: 4 empty cells become solid cells and any completed lines will be cleared, dropping the rows above. For each valid placement of the current piece and its associated playfield consequences, the bot tries each valid placement of the next piece, evaluating the combined consequences. This chain of searches is represented by SearchChain.

Each of the combined consequences is fed into an evaluation function, which scores the contents of the playfield. The combo with the lowest score wins out and the current piece is placed accordingly. The results of the search chain only affect the current piece. When the next piece spawns, it is evaluated with the next-next piece and so on.

The evaluation function is a weighted sum of the following factors:

The Particle Swarm Optimization (PSO) variant described in reference [1] was used to find the weights for the evaluation function. The suggested inertia and acceleration coefficients were applied to achieve good convergent behavior. And, maximum particle step sizes were established by clamping their velocity magnitudes.

During each iteration, the particles were evaluated in parallel to fully exploit the available computational resources. In addition, after convergence was detected (no advancement after a certain number of iterations), the PSO was setup to automatically restart with a new set of randomly selected weights, enabling it to explore more of the search space.

Each particle’s position vector (it’s weights) were evaluated by simulating 100 runs of the 19–28 plateau. A full run means 230 line clears, but many end in a top out. The scores of the runs are sorted and the particle’s evaluation is defined as the average of the best 33 out of the 100 runs. The idea is to select for aggression. By focusing exclusively on the upper-third, the particles only experience and become accustomed to favorable piece sequences, limiting the need to act conservatively. As a result, they tend to push a typical game to the brink, hanging on just long enough for that next long bar.

The piece sequences for the 100 runs were generate prior to PSO execution and the same sequences were used over and over again. This was necessary to fix the search space, to make the candidate solutions comparable to each other. The sequences were produced by employing the logic of the actual Nintendo Tetris PRNG, which was designed to reduce the odds of consecutive duplicates. But, the PRNG is also flawed: it does not pick pieces uniformly.

Initial attempts produced bots that were way too aggressive. If they made it through the 19–28 plateau, they would usually max out the score. But, unfortunately, they often just topped out early. In response, four things were done to pacify the bots:

With the pacification rules in place, PSO provided the following weights:

| Factor | Weight |

|---|---|

| Total Lines Cleared | 0.286127095297893900 |

| Total Lock Height | 1.701233676909959200 |

| Total Well Cells | 0.711304230768307700 |

| Total Deep Wells | 0.910665415998680400 |

| Total Column Holes | 1.879338064244357000 |

| Total Weighted Column Holes | 2.168463848297177000 |

| Total Column Hole Depths | −0.265587111961757270 |

| Min Column Hole Depth | 0.289886584949610500 |

| Max Column Hole Depth | 0.362361055261181730 |

| Total Column Transitions | −0.028668795795469625 |

| Total Row Transitions | 0.874179981113233100 |

| Total Column Heights | −0.507409683144361900 |

| Pile Height | −2.148676202831281000 |

| Column Height Spread | −1.187558540281141700 |

| Total Solid Cells | −2.645656132241128000 |

| Total Weighted Solid Cells | 0.242043416268706620 |

| Column Height Variance | 0.287838126164431440 |

Since the search chain hunts for the combination that minimizes the evaluation function, factors paired with positive weights can be viewed as bonuses and the remainder can be viewed as penalties. But, the magnitudes of the weights do not necessarily indicate the significance of their associated factors; they are not normalized in some way that makes them comparable.

To estimate the strength of the AI, scores were collected across ~1.7 million simulated runs of the 19–28 plateau. The scores do not reflect any play into level 29 or above, and they omit points gained from soft drops. But, they do include games that ended prematurely in a top out. The Nintendo Tetris PRNG logic was used to generate the Tetrimino sequences for the simulated runs.

Within these results, the maximum score is 1,313,600. And, the minimum is 0.

The mean score is 816,379, which sounds low. But, as you’ll see below, the data is skewed such that the median score, 989,200, provides a much better idea of a typical value.

As discussed earlier, the PSO optimized the weights based on the average of the best third of its runs. In this case the average score of the best third is 1,108,860. In fact, the average score of the top 75% is 1,000,000.

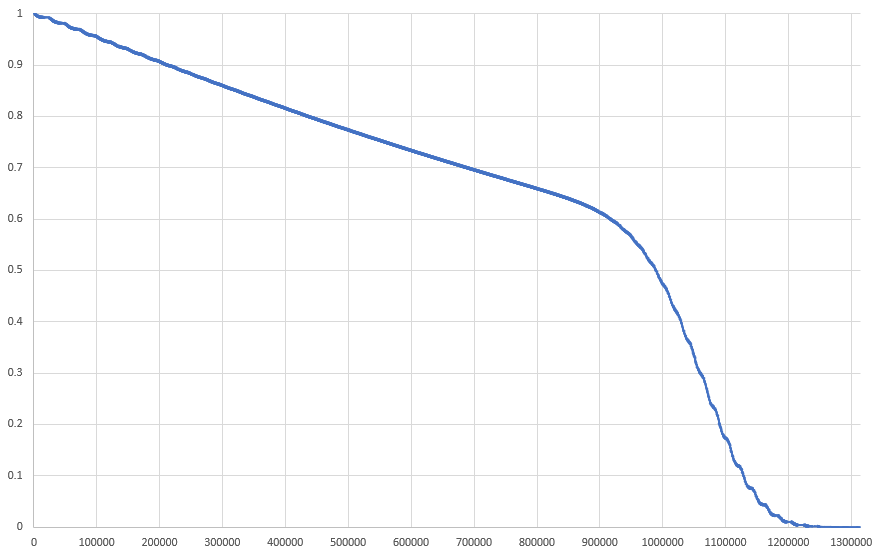

The bot has a probability of 47% of maxing out the score prior to level 29. It has a probability of 61% of obtaining at least 900,000 points prior to level 29. In fact, the chart below provides the odds of attaining any particular score prior to level 29.

The probability appears to drop linearly up until about 900,000 points. Then, it transitions to an inverted sigmoid curve.

Below is a smoothed-out histogram showing the number of runs for each attained score. It’s shape is determined by the derivative of the plot above it.

Ignoring the wobble, it’s flat up until about 900,000 and then it transitions to a normal distribution centered around 1,050,000 points. The exact source of the wobble is unclear. It seems to suggest that scores prefer to jump in units of 20,000 points. It might be related to the cycle of stack building and achieving Tetrises.

The plugin uses the Nintaco API to manipulate CPU Memory, to assert gamepad buttons and to receive frame rendered events. All memory addresses were discovered through exploration with the Nintaco Debugging Tools and the information has been added to the Data Crystal ROMhacking.net wiki. In the source, they appear as constants within the Addresses interface.

Copyright © 2018 meatfighter.com

This project is free software; you can redistribute it and/or modify it under the terms of LGPLv2.1.